Heron is the direct successor of Apache Storm. From an architectural perspective, it is markedly different from Storm, but it is fully backwards compatible with Storm from an API perspective.

The sections below clarify the distinction between Heron and Storm, describe the design goals behind Heron, and explain major components of its architecture.

Codebase

A detailed guide to the Heron codebase can be found here.

Topologies

You can think of a Heron cluster as a mechanism for managing the lifecycle of stream-processing entities called topologies. More information can be found in the Heron Topologies document.

Relationship with Apache Storm

Heron is the direct successor of Apache Storm, but it was built with two goals in mind:

- Overcoming Storm’s performance, reliability, and other shortcomings by replacing Storm’s thread-based computing model with a process-based model.

- Retaining full compatibility with Storm’s data model and topology API.

For a more in-depth discussion of Heron and Storm, see the paper "Twitter Heron: Stream Processing at Scale."

Heron Design Goals

For a description of the principles that Heron was designed to fulfill, see Heron Design Goals.

Topology Components

The following core components of Heron topologies are discussed in depth in the sections below:

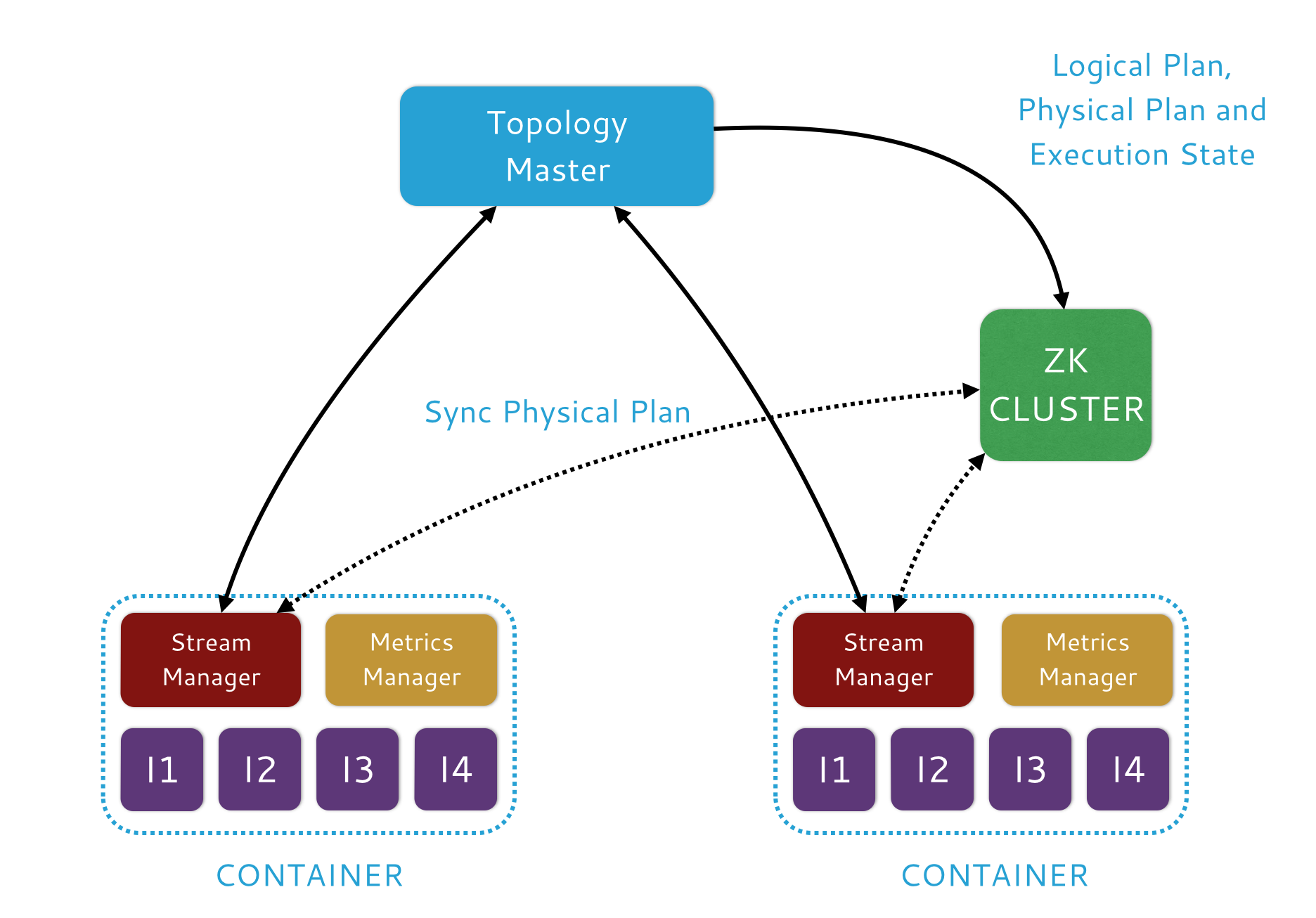

Topology Master

The Topology Master (TM) manages a topology throughout its entire lifecycle, from the time it’s submitted until it’s ultimately killed. When Heron deploys a topology, it starts a single TM and multiple containers.

The TM creates an ephemeral ZooKeeper node to ensure that there’s only one TM for the topology and that the TM is easily discoverable by any process in the topology. The TM also constructs the physical plan for the topology, which it relays to the other components.
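To make the single-TM guarantee concrete, here is a minimal sketch of the create-if-absent behavior an ephemeral node provides. It assumes an in-memory stand-in (the hypothetical EphemeralRegistry class) instead of a real ZooKeeper client, and the node path is invented for illustration. The first TM to create the node wins, later attempts fail, and once the winner's session ends the node disappears so a replacement TM can register.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory stand-in for ZooKeeper's ephemeral nodes. A node is
// owned by the session that created it and disappears when that session ends.
// This is NOT the ZooKeeper client API.
final class EphemeralRegistry {
    private final Map<String, String> values = new HashMap<>();
    private final Map<String, Long> owners = new HashMap<>();

    /** Creates the node atomically; returns false if another session holds it. */
    synchronized boolean createEphemeral(String path, String value, long sessionId) {
        if (values.containsKey(path)) {
            return false; // a TM is already registered for this topology
        }
        values.put(path, value);
        owners.put(path, sessionId);
        return true;
    }

    /** Lets any process in the topology discover the current TM. */
    synchronized Optional<String> read(String path) {
        return Optional.ofNullable(values.get(path));
    }

    /** Simulates a session ending: every node it created is removed. */
    synchronized void closeSession(long sessionId) {
        owners.entrySet().removeIf(entry -> {
            boolean owned = entry.getValue() == sessionId;
            if (owned) {
                values.remove(entry.getKey());
            }
            return owned;
        });
    }
}

final class SingleTopologyMasterDemo {
    public static void main(String[] args) {
        EphemeralRegistry zk = new EphemeralRegistry();
        String path = "/example/tmaster/word-count"; // hypothetical path layout
        System.out.println(zk.createEphemeral(path, "host-a:9000", 1L)); // true
        System.out.println(zk.createEphemeral(path, "host-b:9000", 2L)); // false
        zk.closeSession(1L); // the first TM's session expires
        System.out.println(zk.createEphemeral(path, "host-b:9000", 2L)); // true
    }
}
```

The synchronized methods play the role of ZooKeeper's atomic node creation; without that atomicity two TMs could both believe they had registered.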

Topology Master Configuration

TMs have a variety of configurable parameters that you can adjust at each phase of a topology’s lifecycle.

Container

Each Heron topology consists of multiple containers, each of which houses multiple Heron Instances, a Stream Manager, and a Metrics Manager. Containers communicate with the topology’s TM to ensure that the topology forms a fully connected graph.

For an illustration, see the figure in the Topology Master section above.

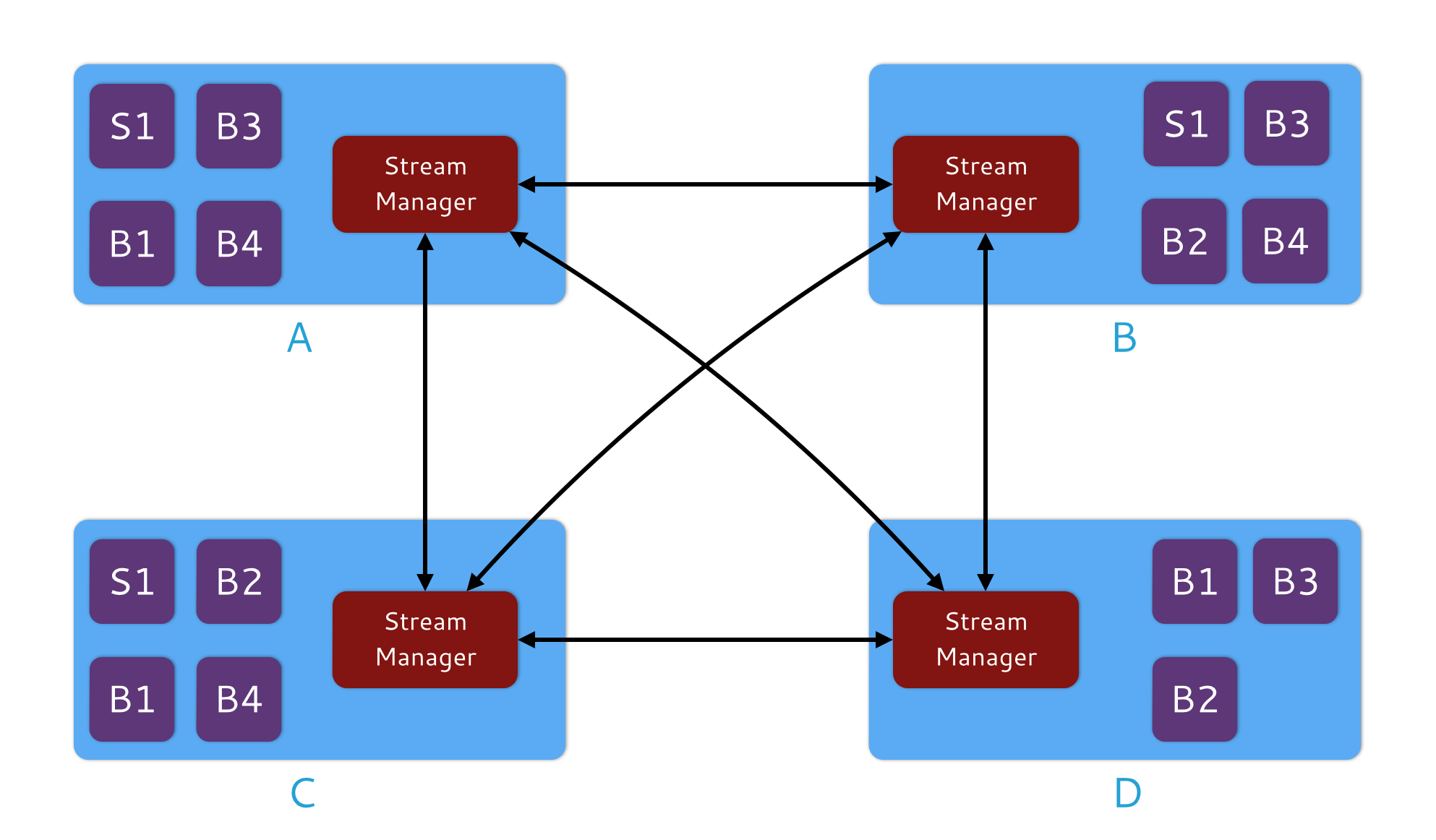
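Which Heron Instances end up in which container is decided by the physical plan. The following is a minimal sketch assuming a simple round-robin strategy; this section does not say which packing algorithm Heron uses, so treat it as one possible approach. The class and method names are hypothetical, and container 0 is left out because the Topology Master runs there.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical round-robin packing sketch. Instances are dealt across worker
// containers in turn; container 0 is left for the Topology Master. Each worker
// container would also get one Stream Manager and one Metrics Manager (omitted).
final class RoundRobinPackingSketch {
    static Map<Integer, List<String>> pack(Map<String, Integer> parallelism, int workerContainers) {
        if (workerContainers < 1) {
            throw new IllegalArgumentException("need at least one worker container");
        }
        Map<Integer, List<String>> plan = new LinkedHashMap<>();
        for (int c = 1; c <= workerContainers; c++) {
            plan.put(c, new ArrayList<>());
        }
        int next = 0;
        for (Map.Entry<String, Integer> component : parallelism.entrySet()) {
            for (int i = 0; i < component.getValue(); i++) {
                // Instance ids are the component name plus an index, e.g. "bolt#1".
                plan.get(1 + next % workerContainers).add(component.getKey() + "#" + i);
                next++;
            }
        }
        return plan;
    }

    public static void main(String[] args) {
        Map<String, Integer> parallelism = new LinkedHashMap<>();
        parallelism.put("spout", 2);
        parallelism.put("bolt", 3);
        System.out.println(pack(parallelism, 2));
        // {1=[spout#0, bolt#0, bolt#2], 2=[spout#1, bolt#1]}
    }
}
```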

Stream Manager

The Stream Manager (SM) manages the routing of tuples between topology components. Each Heron Instance in a topology connects to its local SM, while all of the SMs in a given topology connect to one another to form a network. Below is a visual illustration of a network of SMs:

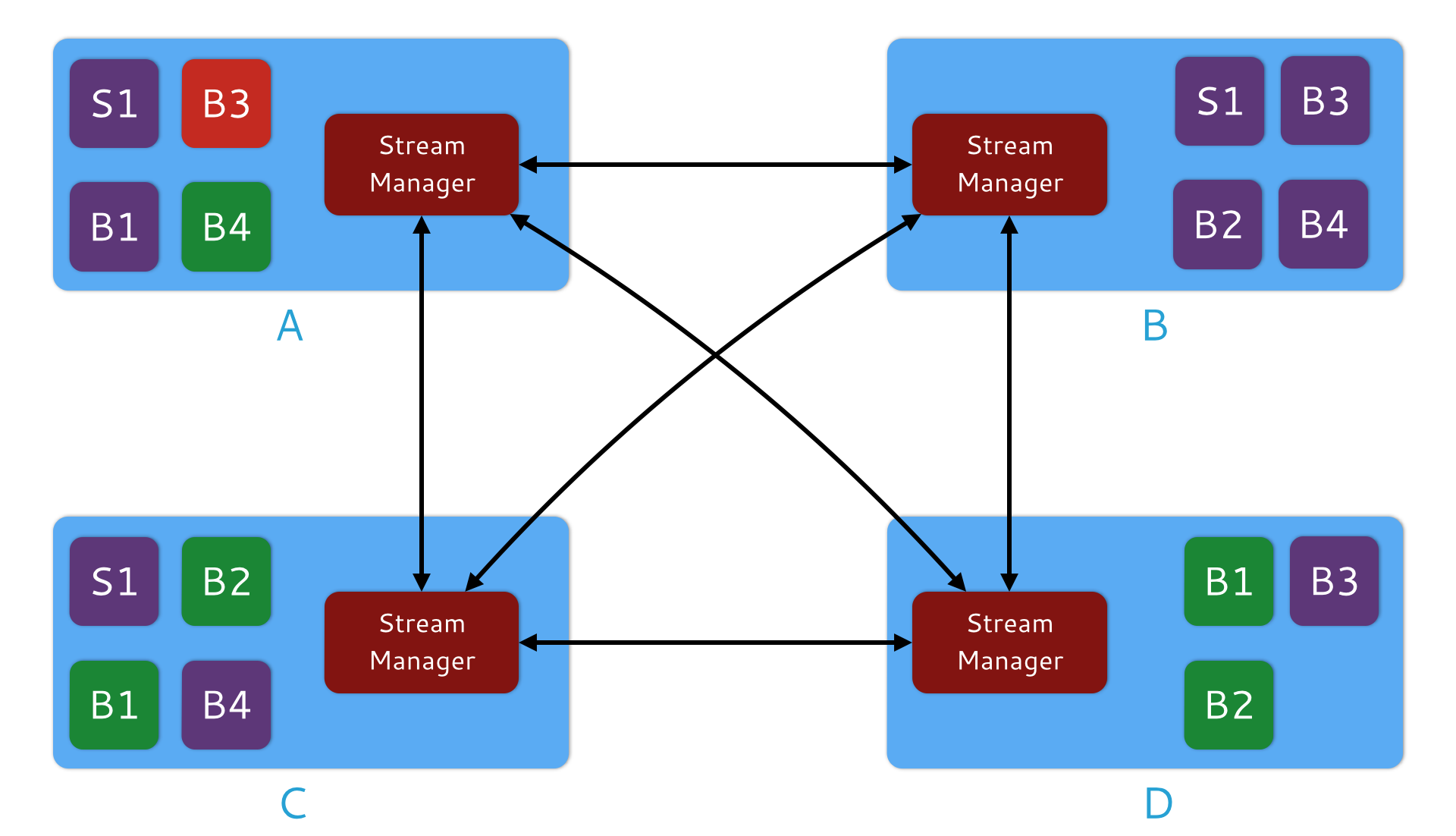
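Because every instance talks only to its local SM and the SMs are all connected to one another, a tuple needs at most one SM-to-SM hop. The sketch below traces those hops for a given physical plan. It assumes a simplified task-to-container map and a hypothetical StreamManagerRouting class; real SMs also deal with stream groupings, serialization, and networking, all of which are omitted here.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of tuple routing between Stream Managers.
// Each instance talks only to its local SM; SMs form a fully connected mesh,
// so a tuple crosses at most one SM-to-SM link.
final class StreamManagerRouting {
    private final Map<Integer, Integer> containerOfTask; // task id -> container id

    StreamManagerRouting(Map<Integer, Integer> containerOfTask) {
        this.containerOfTask = containerOfTask;
    }

    /** Returns the hops a tuple takes from the source task to the destination task. */
    List<String> route(int srcTask, int dstTask) {
        int srcContainer = lookup(srcTask);
        int dstContainer = lookup(dstTask);
        List<String> hops = new ArrayList<>();
        hops.add("instance " + srcTask + " -> SM " + srcContainer);
        if (srcContainer != dstContainer) {
            // Remote destination: forward directly to the peer SM.
            hops.add("SM " + srcContainer + " -> SM " + dstContainer);
        }
        hops.add("SM " + dstContainer + " -> instance " + dstTask);
        return hops;
    }

    private int lookup(int task) {
        Integer container = containerOfTask.get(task);
        if (container == null) {
            throw new IllegalArgumentException("task " + task + " is not in the physical plan");
        }
        return container;
    }

    public static void main(String[] args) {
        Map<Integer, Integer> plan = new HashMap<>();
        plan.put(1, 1); // e.g. a spout task in container 1
        plan.put(2, 1); // a bolt task co-located with it
        plan.put(3, 2); // a bolt task in container 2
        StreamManagerRouting sm = new StreamManagerRouting(plan);
        System.out.println(sm.route(1, 2)); // local delivery: 2 hops
        System.out.println(sm.route(1, 3)); // remote delivery: 3 hops
    }
}
```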

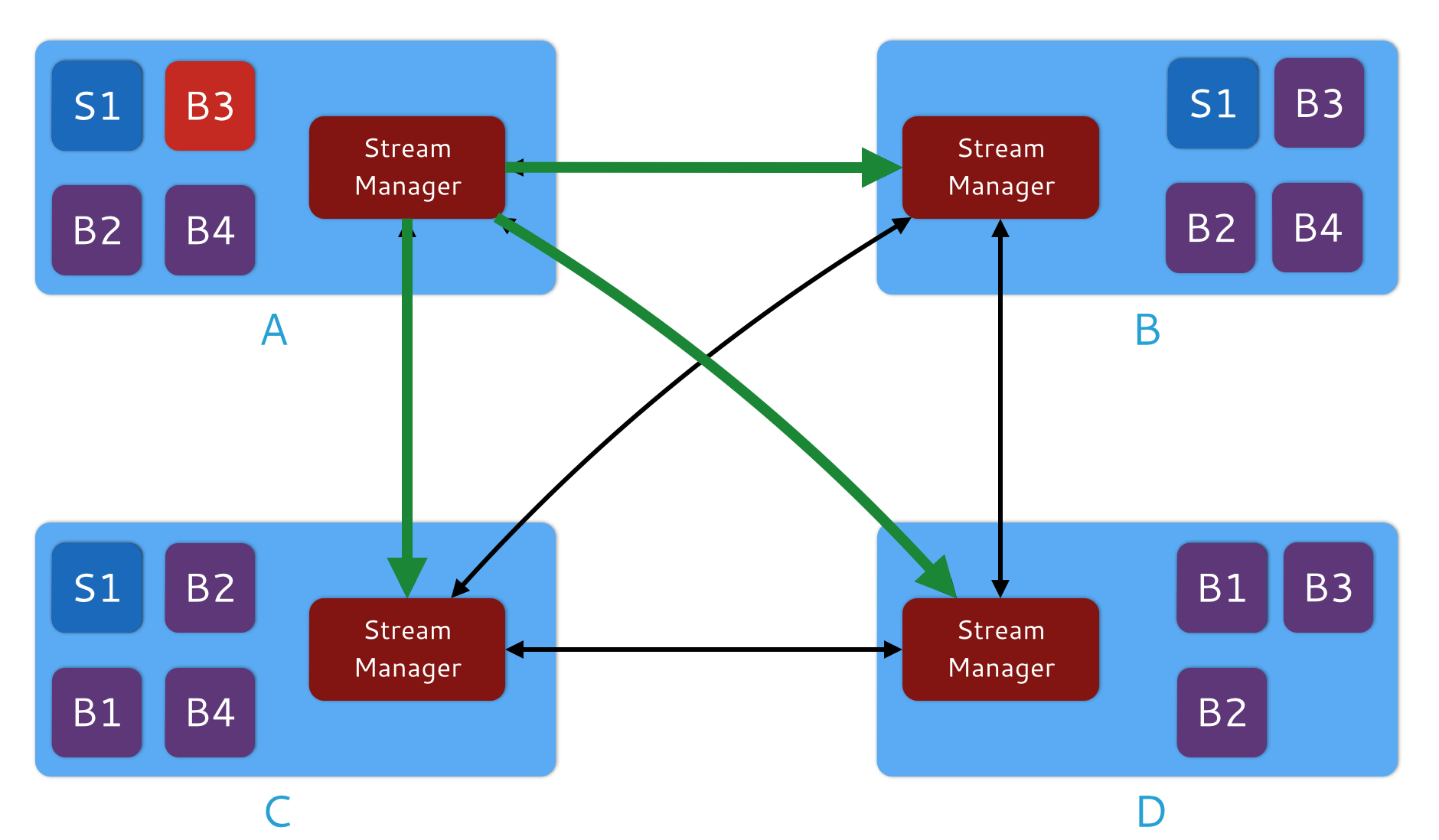

In addition to being a routing engine for data streams, SMs are responsible for propagating back pressure within the topology when necessary. Below is an illustration of back pressure:

In the diagram above, assume that bolt B3 (in container A) receives all of its inputs from spout S1. B3 is running more slowly than the other components. In response, the SM for container A will refuse input from the SMs in containers C and D. This will cause the socket buffers in those containers to fill up, which could lead to throughput collapse.

In a situation like this, Heron’s back pressure mechanism will kick in. The SM in container A will send a message to all the other SMs. In response, the other SMs will examine the topology’s physical plan and cut off inputs from the spouts that feed bolt B3 (in this case, spout S1).

Once the lagging bolt (B3) begins functioning normally, the SM in container A will notify the other SMs and stream routing within the topology will return to normal.
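The decision the other SMs make in this scenario can be sketched as a reverse search over the topology's graph: starting from the lagging bolt, walk upstream and collect every spout that can reach it. This is a hypothetical illustration (BackPressureSketch and its callback names are invented); it models only which spouts get cut off, not the messages or socket handling. Tracking the throttled spouts per lagging bolt keeps a spout cut off while any slow bolt still depends on it.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of how an SM could decide which spouts to cut off.
// The graph maps each component to its downstream components.
final class BackPressureSketch {
    private final Map<String, List<String>> downstream;
    private final Set<String> spouts;
    // Slow bolt -> spouts throttled on its behalf; several bolts may lag at once.
    private final Map<String, Set<String>> active = new HashMap<>();

    BackPressureSketch(Map<String, List<String>> downstream, Set<String> spouts) {
        this.downstream = downstream;
        this.spouts = spouts;
    }

    /** Spouts with a path to the given bolt, found by a reverse breadth-first search. */
    Set<String> spoutsFeeding(String bolt) {
        Map<String, List<String>> upstream = new HashMap<>();
        downstream.forEach((from, targets) -> {
            for (String to : targets) {
                upstream.computeIfAbsent(to, k -> new ArrayList<>()).add(from);
            }
        });
        Set<String> seen = new HashSet<>();
        Set<String> result = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(bolt);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String parent : upstream.getOrDefault(current, List.of())) {
                if (seen.add(parent)) {
                    if (spouts.contains(parent)) {
                        result.add(parent);
                    }
                    queue.add(parent);
                }
            }
        }
        return result;
    }

    /** Called when the SM hosting a slow bolt broadcasts that back pressure started. */
    void onBackPressureStarted(String slowBolt) {
        active.put(slowBolt, spoutsFeeding(slowBolt));
    }

    /** Called when that SM reports the bolt has recovered. */
    void onBackPressureStopped(String recoveredBolt) {
        active.remove(recoveredBolt);
    }

    /** A spout stays cut off while any lagging bolt still depends on it. */
    boolean isThrottled(String spout) {
        return active.values().stream().anyMatch(set -> set.contains(spout));
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = Map.of(
                "S1", List.of("B1", "B3"),
                "S2", List.of("B2"),
                "B1", List.of("B2"));
        BackPressureSketch sm = new BackPressureSketch(graph, Set.of("S1", "S2"));
        sm.onBackPressureStarted("B3");
        System.out.println(sm.isThrottled("S1") + " " + sm.isThrottled("S2")); // true false
        sm.onBackPressureStopped("B3");
        System.out.println(sm.isThrottled("S1")); // false
    }
}
```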

Stream Manager Configuration

SMs have a variety of configurable parameters that you can adjust at each phase of a topology’s lifecycle.

Heron Instance

A Heron Instance (HI) is a process that handles a single task of a spout or bolt, which allows for easy debugging and profiling.

At the time of writing, Heron supports only Java, so all HIs are JVM processes; this is expected to change in the future.

Heron Instance Configuration

HIs have a variety of configurable parameters that you can adjust at each phase of a topology’s lifecycle.

Metrics Manager

Each container in a topology runs a Metrics Manager (MM) that collects and exports metrics from all components in that container. The MM then routes those metrics both to the Topology Master and to external collectors such as Scribe, Graphite, or analogous systems.

You can adapt Heron to support additional systems by implementing your own custom metrics sink.
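A custom sink generally needs to accept configuration, receive metric records, and push them to the external system. The sketch below uses a hypothetical MetricsSink interface with init, processRecord, flush, and close methods; it is not Heron's actual sink API, whose names and signatures should be checked against the Heron source. The collector list stands in for a system such as Graphite, and the "prefix" configuration key is invented for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sink contract, for illustration only. Heron defines its own
// sink interface; its real method names and signatures may differ.
interface MetricsSink {
    void init(Map<String, Object> config);
    void processRecord(String source, Map<String, Double> metrics);
    void flush();
    void close();
}

// A minimal custom sink: it buffers records as "source.metric value" lines and
// hands them to a collector on flush. The List stands in for an external system.
final class LineMetricsSink implements MetricsSink {
    private final List<String> buffer = new ArrayList<>();
    private final List<String> collector;
    private String prefix = "";

    LineMetricsSink(List<String> collector) {
        this.collector = collector;
    }

    @Override
    public void init(Map<String, Object> config) {
        // "prefix" is a hypothetical configuration key.
        prefix = String.valueOf(config.getOrDefault("prefix", ""));
    }

    @Override
    public void processRecord(String source, Map<String, Double> metrics) {
        metrics.forEach((name, value) -> buffer.add(prefix + source + "." + name + " " + value));
    }

    @Override
    public void flush() {
        collector.addAll(buffer);
        buffer.clear();
    }

    @Override
    public void close() {
        flush(); // do not lose buffered records on shutdown
    }
}

final class MetricsSinkDemo {
    public static void main(String[] args) {
        List<String> collector = new ArrayList<>();
        MetricsSink sink = new LineMetricsSink(collector);
        Map<String, Object> config = new HashMap<>();
        config.put("prefix", "heron.");
        sink.init(config);
        sink.processRecord("container-1", Map.of("emit-count", 42.0));
        sink.flush();
        System.out.println(collector); // [heron.container-1.emit-count 42.0]
    }
}
```

Buffering until flush lets a real sink batch writes to the external system instead of making one network call per record.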

Cluster-level Components

All of the components listed in the sections above can be found in each topology. The components listed below are cluster-level components that function outside of particular topologies.

Heron CLI

Heron has a CLI tool called heron that is used to manage topologies.

Documentation can be found in Managing Topologies.

Heron Tracker

The Heron Tracker (or just Tracker) is a centralized gateway for cluster-wide information about topologies, including which topologies are running, being launched, being killed, etc. It relies on the same ZooKeeper nodes as the topologies in the cluster and exposes that information through a JSON REST API. The Tracker can be run within your Heron cluster (on the same set of machines managed by your Heron scheduler) or outside of it.

Instructions on running the Tracker, including JSON API documentation, can be found in Heron Tracker.

Heron UI

Heron UI is a rich visual interface that you can use to interact with topologies. Through Heron UI you can see color-coded visual representations of the logical and physical plans of each topology in your cluster.

For more information, see the Heron UI document.

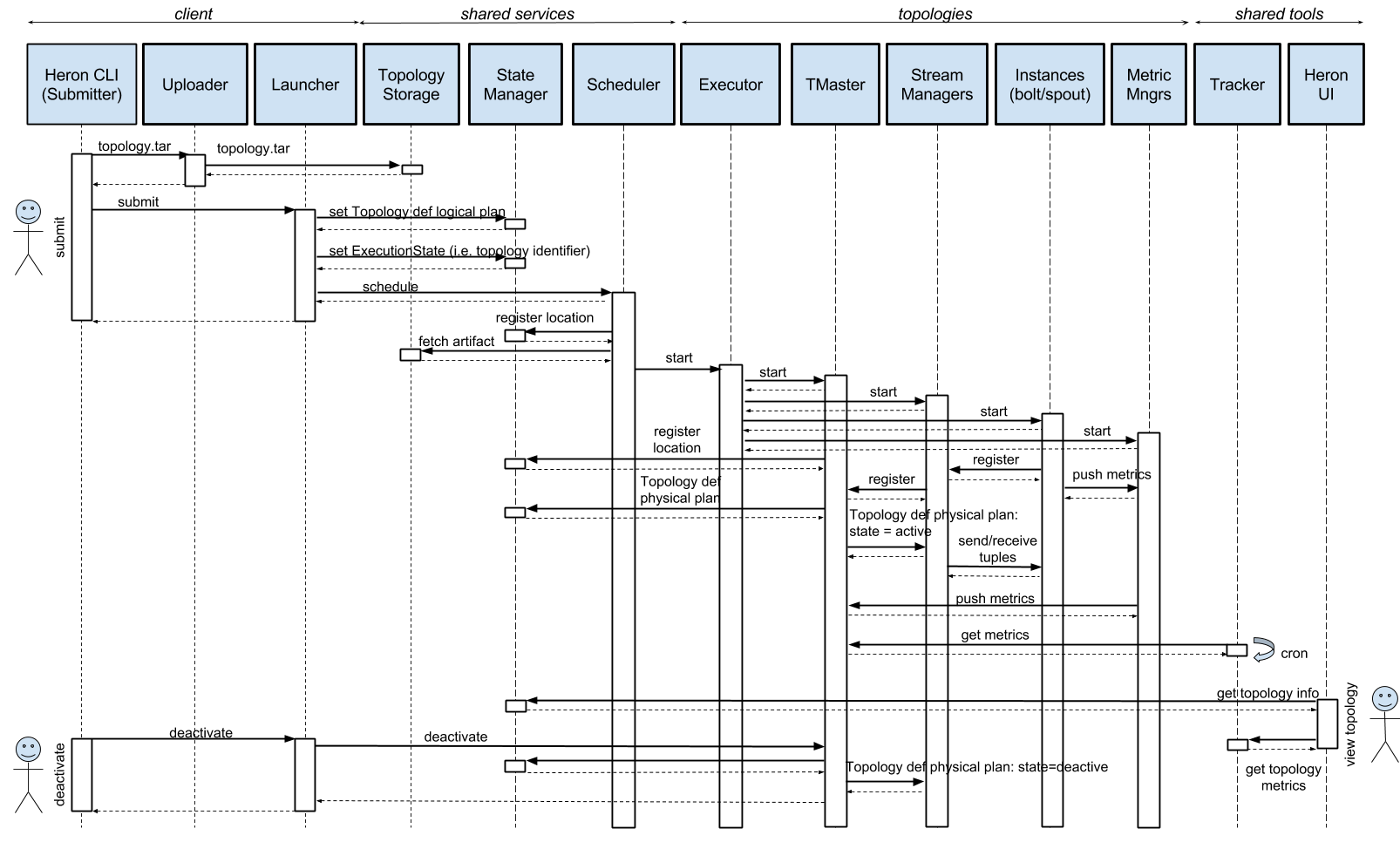

Topology Submit Sequence

Topology Lifecycle describes the lifecycle states of a Heron

topology. The diagram below illustrates the sequence of interactions amongst the Heron architectural

components during the submit and deactivate client actions. Additionally, the system interaction

while viewing a topology on the Heron UI is shown.
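The submit half of this sequence, which the next section describes step by step, can be condensed into an ordered list of actions. The sketch below is hypothetical: the SubmitSequenceSketch class only records step names so the ordering is easy to inspect, and it linearizes work that in practice happens in separate processes (the heron-executor processes, for instance, run concurrently).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: the submit sequence reduced to an ordered list of
// actions. Real Heron components run as separate processes; here each step is
// only recorded so the ordering is easy to inspect.
final class SubmitSequenceSketch {
    static List<String> submit(String topology, int containers, int instancesPerContainer) {
        if (containers < 2) {
            throw new IllegalArgumentException("need container 0 for the TM plus at least one more");
        }
        List<String> steps = new ArrayList<>();
        // Client: run the topology's main, then SubmitterMain uploads and launches.
        steps.add("client: write " + topology + ".defn with the logical plan");
        steps.add("uploader: upload the " + topology + " package");
        steps.add("launcher: register logical plan with the State Manager");
        steps.add("launcher: invoke the main scheduler");
        // Shared services: SchedulerMain builds the physical plan and starts executors.
        steps.add("scheduler: build the physical plan");
        for (int c = 0; c < containers; c++) {
            steps.add("scheduler: start heron-executor for container " + c);
        }
        // Topologies: container 0 runs the TM; every other container starts
        // an SM and an MM before its Heron Instances.
        steps.add("executor 0: start Topology Master");
        for (int c = 1; c < containers; c++) {
            steps.add("executor " + c + ": start Stream Manager and Metrics Manager");
            for (int i = 0; i < instancesPerContainer; i++) {
                steps.add("executor " + c + ": start HeronInstance " + i);
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        submit("word-count", 2, 1).forEach(System.out::println);
    }
}
```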

Topology Submit Description

The following describes in more detail how a topology is submitted and launched using the local scheduler.

Client

When a topology is submitted using the heron submit command, it first executes the main method of the topology and creates a .defn file containing the topology’s logical plan. Then, it runs com.twitter.heron.scheduler.SubmitterMain, which is responsible for invoking an uploader and a launcher for the topology. The uploader uploads the topology package to the given location, while the launcher registers the topology’s logical plan and executor state with the State Manager and invokes the main scheduler.

Shared Services

When the main scheduler (com.twitter.heron.scheduler.SchedulerMain) is invoked by the launcher, it fetches the submitted topology artifact from the topology storage, initializes the State Manager, and prepares a physical plan that specifies how multiple instances should be packed into containers. Then, it starts the specified scheduler, such as com.twitter.heron.scheduler.local.LocalScheduler, which invokes the heron-executor for each container.

Topologies

A heron-executor process is started for each container and is responsible for executing the Topology Master or the Heron Instances (bolts and spouts) that are assigned to the container. Note that the Topology Master is always executed on container 0. When heron-executor executes normal Heron Instances (that is, in every container other than container 0), it first prepares the Stream Manager and the Metrics Manager before starting com.twitter.heron.instance.HeronInstance for each instance that is assigned to the container.

Each Heron Instance has two threads: the gateway thread and the slave thread. The gateway thread is mainly responsible for communicating with the Stream Manager and the Metrics Manager using StreamManagerClient and MetricsManagerClient respectively, as well as for sending tuples to and receiving tuples from the slave thread. The slave thread, on the other hand, runs either a spout or a bolt of the topology, based on the physical plan.

When a new Heron Instance is started, its StreamManagerClient establishes a connection and registers itself with the Stream Manager. After successful registration, the gateway thread sends the physical plan to the slave thread, which then executes the assigned instance accordingly.
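The gateway/slave split can be illustrated with two threads exchanging tuples through queues. In this minimal sketch, the TwoThreadInstanceSketch class, its end-of-stream marker, and the use of unbounded LinkedBlockingQueue channels are all assumptions. The gateway thread feeds tuples it would have received from the Stream Manager to the slave thread, which applies bolt logic and hands the results back. Networking, registration, and the physical plan handoff are left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.UnaryOperator;

// Hypothetical sketch of a Heron Instance's gateway/slave thread split.
// StreamManagerClient, MetricsManagerClient, registration, and the physical
// plan handoff are omitted; queues stand in for the in-process channels.
final class TwoThreadInstanceSketch {
    private static final String END = "<end-of-stream>"; // hypothetical marker

    static List<String> run(List<String> fromStreamManager, UnaryOperator<String> boltLogic) {
        BlockingQueue<String> toSlave = new LinkedBlockingQueue<>(); // unbounded for simplicity
        BlockingQueue<String> fromSlave = new LinkedBlockingQueue<>();
        List<String> toStreamManager = new ArrayList<>(); // written only by the gateway

        Thread slave = new Thread(() -> {
            try {
                for (String t = toSlave.take(); !t.equals(END); t = toSlave.take()) {
                    fromSlave.put(boltLogic.apply(t)); // run the user's bolt logic
                }
                fromSlave.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "slave");

        Thread gateway = new Thread(() -> {
            try {
                for (String t : fromStreamManager) {
                    toSlave.put(t); // tuples the SM delivered to this instance
                }
                toSlave.put(END);
                for (String out = fromSlave.take(); !out.equals(END); out = fromSlave.take()) {
                    toStreamManager.add(out); // tuples to forward back to the SM
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "gateway");

        slave.start();
        gateway.start();
        try {
            gateway.join();
            slave.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for instance threads", e);
        }
        return toStreamManager; // join() makes the gateway's writes visible here
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("a", "b", "c"), String::toUpperCase)); // [A, B, C]
    }
}
```

Keeping all communication on the gateway thread means user spout or bolt code never blocks on the network; it only ever touches the in-process queues.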